The Sapienza Natural Language Processing Group (Sapienza NLP), led by Prof. Roberto Navigli, includes a large team of Ph.D. students and researchers who are part of the Computer, Control and Management Engineering Department and the Computer Science Department of Sapienza University of Rome.

Our group aims at devising and developing innovative approaches to multilingual Natural Language Understanding. We pursue a vision focused on integrating explicit, symbolic knowledge with deep learning.

The group's work is financed by several sources of funding, including ERC grants, other EU and national projects, and the Babelscape Sapienza spin-off.

Announcing Minerva-7B: try it now!

Large Language Models (LLMs) are transforming how we interact with technology, but most are designed with English as their primary focus, often leaving other languages underserved. Enter Minerva, the first family of LLMs developed from scratch with a primary focus on the Italian language.

Sapienza NLP @ NAACL 2025

We're thrilled to present a paper at NAACL 2025! We've traveled to Albuquerque to present our paper on vocabulary adaptation.

Sapienza NLP @ EMNLP 2024

We are delighted to share that 3 papers from our group were accepted at EMNLP 2024, including an outstanding paper award winner! Catch us in Miami, Florida, to learn more about machine translation meta-evaluation, commonsense QA and RAG through multilingual knowledge graphs.

ACL 2024 Outstanding Paper Award!

We are excited to announce that our work "NounAtlas: Filling the Gap in Nominal Semantic Role Labeling" has received an Outstanding Paper Award at ACL 2024! Many congratulations to the authors Roberto Navigli, Marco Lo Pinto, Pasquale Silvestri, Dennis Rotondi, Simone Ciciliano and Alessandro Scirè.

Sapienza NLP @ ACL 2024

We are excited to share that we have 8 papers accepted at ACL 2024! We are in Bangkok to present our work on nominal semantic role labeling, machine translation meta-evaluation, coreference resolution, summarization factuality evaluation, word sense disambiguation, joint relation extraction and entity linking, and semantic parsing.

Sapienza NLP @ NAACL 2024

We are glad to announce that we have 2 papers accepted at NAACL 2024! We are in Mexico City to present our work on concept and named entity recognition and semantically-annotated Wikipedia.

Sapienza NLP @ LREC-COLING 2024

We are glad to announce that we have 2 papers accepted at LREC-COLING 2024! We are in Torino to present our work on Latin WSD and homonymy disambiguation.

Minerva models are out!

📰 ANSA, Il Sole 24 Ore, 📺 RaiNews (Article & VIDEO)

We are excited to announce the launch of the Minerva models, the first family of Large Language Models (LLMs) pre-trained from scratch for the Italian language. This initiative, a collaboration with CINECA using the Leonardo supercomputer, is part of the strategic PNRR FAIR initiative, demonstrating a significant advancement in the field of generative AI in Italy. Leading this project are Professor Roberto Navigli, a recipient of two prestigious ERC grants and ACL fellow, along with our researchers, Edoardo Barba and Simone Conia. Additionally, PhD students Pere-Lluís Huguet Cabot, Riccardo Orlando, and Luca Moroni have played integral roles in advancing the project's research and development.

Sapienza NLP @ EACL 2024

Sapienza NLP is proud to present "CroCoAlign: A Cross-Lingual, Context-Aware and Fully-Neural Sentence Alignment System for Long Texts" at the main conference EACL 2024. This work presents a state-of-the-art approach for cross-lingual sentence alignment of long texts.

Roberto Navigli selected as ACL Fellow!

Prof. Roberto Navigli has just been selected by the Association for Computational Linguistics as an ACL Fellow! The Fellows program recognizes ACL members whose contributions to the field have been most extraordinary in terms of scientific and technical excellence, service to the association and the community and/or educational or outreach activities with broader impact.

Workshop on Training and Evaluation Data for Italian Large Language Models

This inaugural workshop, focusing on the development of Large Language Models (LLMs) for the Italian language, marks the initial phase of constructing a Large Multimodal Model within the framework of the Transversal Project "Vision, Language, and Multimodal Challenges" as part of the larger project "Future Artificial Intelligence Research" (FAIR).

Sapienza NLP @ EMNLP 2023

Sapienza NLP is proud to present "Code-Switching with Word Senses for Pretraining in Neural Machine Translation" at EMNLP 2023. This work focuses on the interaction between the tasks of Word Sense Disambiguation and Machine Translation.

Sapienza NLP @ CLiC-it 2023

Sapienza NLP will be at CLiC-it 2023 with 4 papers on entity disambiguation, the meaning of superhuman performances in NLU, multilingual relation extraction, and multilingual sentence alignment.

Sapienza NLP @ AACL 2023

LexicoMatic: Automatic Creation of Multilingual Lexical-Semantic Dictionaries has been accepted at AACL 2023!

Sapienza NLP @ ACL 2023

We are happy to announce that our group has 8 publications at ACL 2023! Our works cover a diverse set of topics such as descriptive language modeling, the meaning of superhuman performances in NLU, semantic parsing, book summarization, relation extraction, and AMR alignment.

Sapienza NLP @ EACL 2023

Entity Disambiguation with Entity Definitions has been accepted at EACL 2023!

Sapienza NLP @ EMNLP 2022

Sapienza NLP has 3 papers accepted at EMNLP 2022! Check out our works on Semantic Role Labeling with Definition Modeling, Machine Translation Evaluation, and Euphemism detection!

Sapienza NLP @ NAACL 2022

Sapienza NLP will be at NAACL with 3 papers! We will present our works on Named Entity Recognition, Entity Disambiguation, Idiomatic Expressions and biases in Neural Machine Translation.

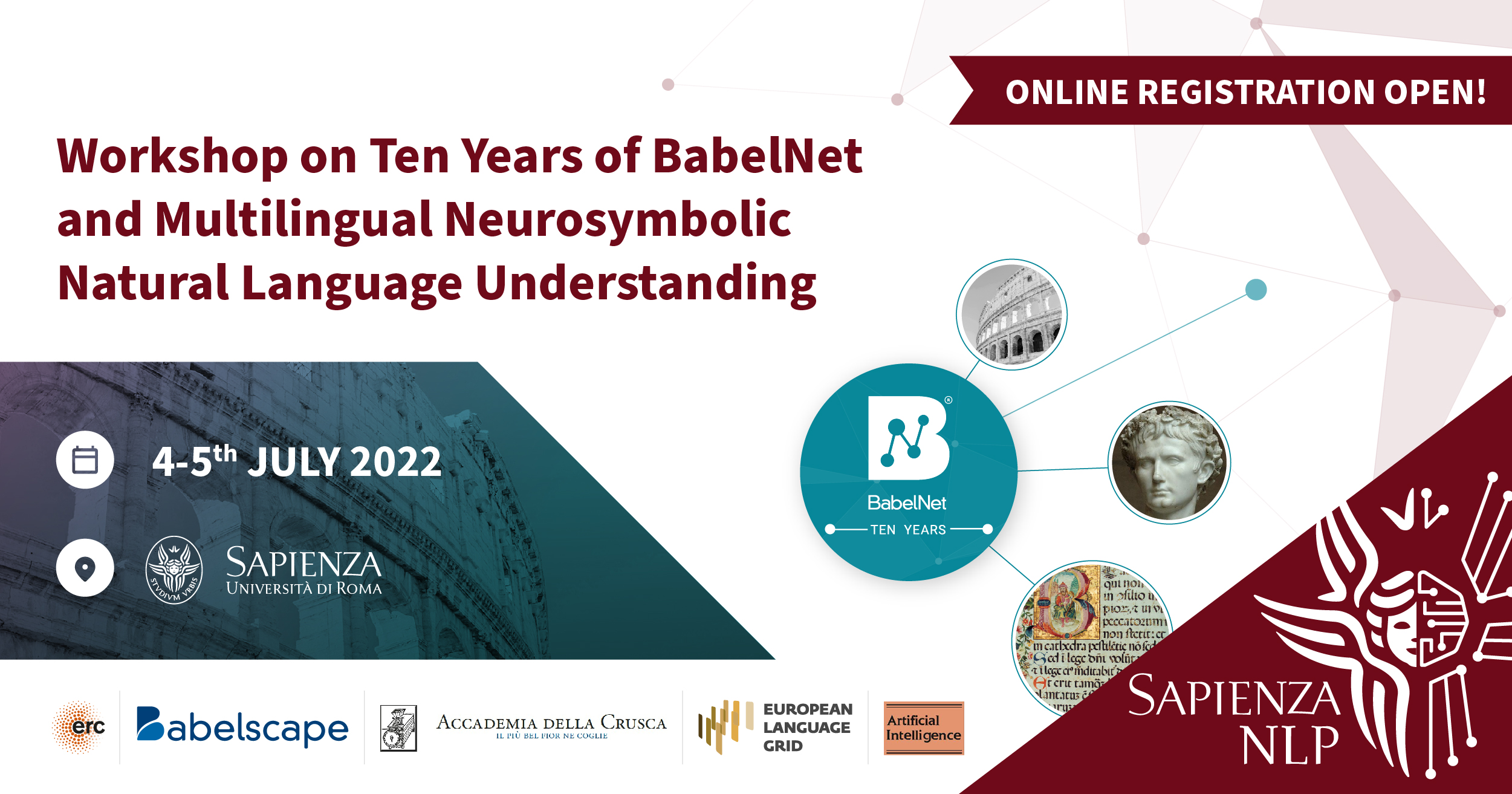

Workshop on Ten Years of BabelNet and Multilingual Neurosymbolic Natural Language Understanding

This 2-day workshop organized at Sapienza in the Department of Computer, Control and Management Engineering takes place after more than 10 years of research on multilingual Natural Language Understanding to celebrate the most far-reaching and novel multilingual dictionary and knowledge base, BabelNet, currently used by more than 1000 universities and research institutions, and discuss the future of neuro-symbolic approaches to Natural Language Understanding.

ACL 2022 Best Resource Paper Award!

We are thrilled to announce that our work “DiBiMT: A Novel Benchmark for Measuring Word Sense Disambiguation Biases in Machine Translation” has received the ‘Best Resource Paper’ award at ACL 2022! Many congratulations to the authors Niccolò Campolungo, Federico Martelli, Francesco Saina and Roberto Navigli!

SapienzaNLP @ ACL 2022

We are proud to announce that our group has 6 main conference publications at ACL 2022! Our works cover a set of diverse topics such as disambiguation biases in MT, structured emotion classification, probing language models, Word Sense and Entity Disambiguation. Furthermore, we released BabelNet Meaning Representation (BMR), our fully-semantic, language-agnostic representation formalism.

SapienzaNLP @ AAAI 2022

The Sapienza NLP group is proud to present two new papers at AAAI 2022! This time, we will showcase our work on semantic typing of events and visual definition modeling. Not only that, we will also introduce our "blue sky" idea on BabelNet Meaning Representation (BMR).

SapienzaNLP @ EMNLP 2021

We are extremely excited about EMNLP 2021, the first hybrid NLP conference in two years! We will be there with 11 papers on a varied range of topics from Word Sense Disambiguation to Lexical Substitution, Information Retrieval, Relation Extraction, Entity Linking and more!

SapienzaNLP @ IJCAI 2021

We are proud to announce that our group will be at IJCAI 2021 with 6 accepted papers: 4 in the main track and 2 surveys! Once again, our papers touch several areas of Semantics in NLP ranging from multilingual alignment to Word Sense Disambiguation, Semantic Role Labeling and Lexical Substitution.

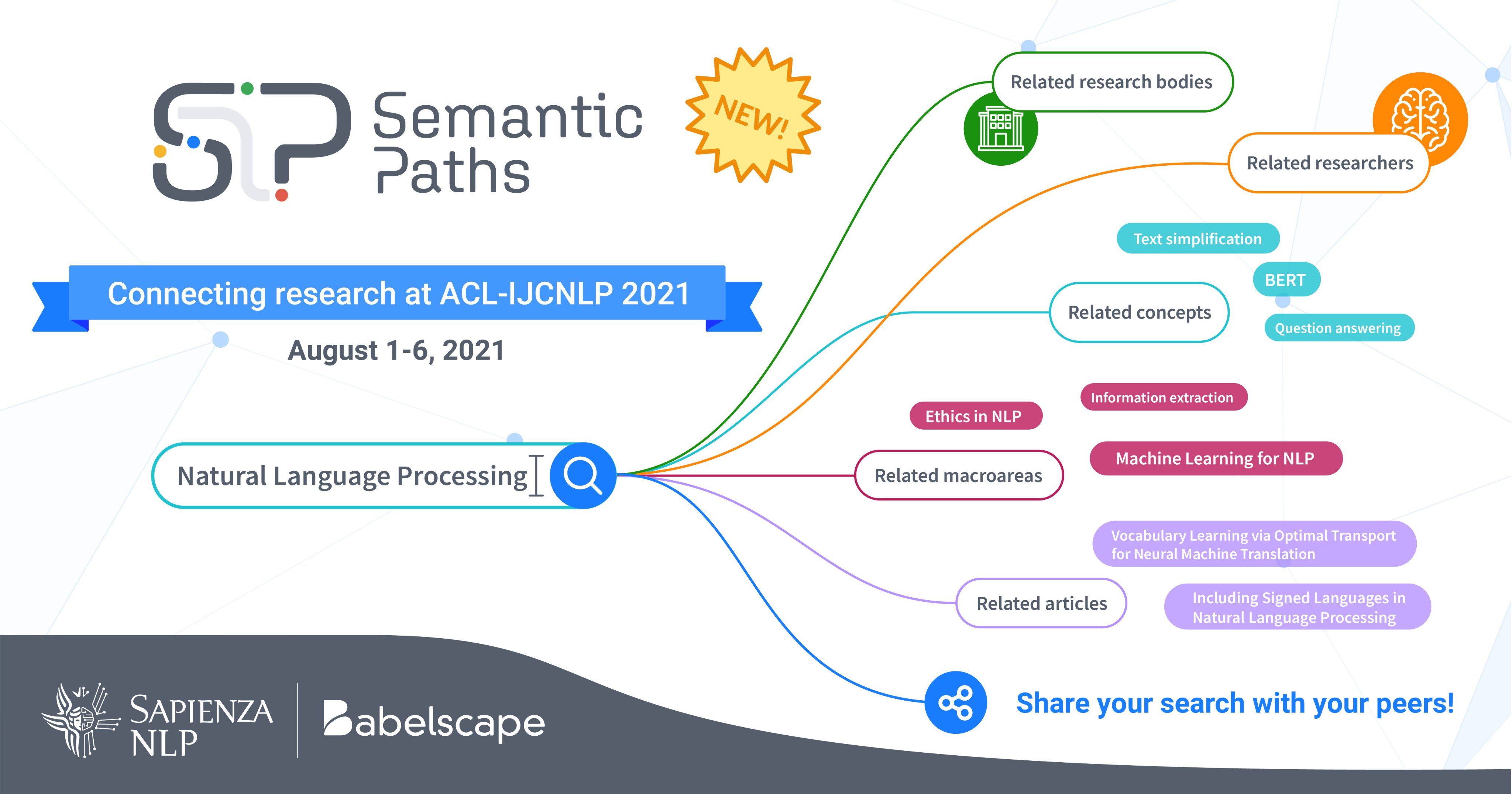

ACL 2021 is about to start!

Are you ready for ACL 2021? The Sapienza NLP group is proud to have our lab director, Prof. Roberto Navigli, as the Program Co-Chair and support this year's edition of ACL. Together with Babelscape, we present Semantic Paths, the easiest way to discover your new favorite papers and researchers!

SapienzaNLP @ NAACL 2021

Sapienza NLP will be at NAACL 2021 with 3 papers on semantics, ranging from Word Sense Disambiguation to cross-lingual Semantic Role Labeling (outstanding paper award!) and multilingual Semantic Parsing! Read more about them in our blog post.

SapienzaNLP @ EACL 2021

Sapienza NLP is at EACL 2021 with 1 short paper + 1 long paper! Read more about our latest research on semantics here.

BabelNet 5 is out now!

Celebrate the 10th anniversary of BabelNet with us! New interface, up-to-date content in 500 languages, 20 million synsets, WordNet 2020, and much more! Check out the latest release of BabelNet at babelnet.org!

SapienzaNLP @ AAAI 2021

We are proud to share the latest developments from our lab at AAAI 2021! Read more about our newest papers on Semantic Parsing (Text-to-AMR and AMR-to-Text) and Multilingual WSD!

SapienzaNLP @ IJCAI 2020

Read more about the latest research presented at IJCAI 2020 from our group: from multilingual Word Sense Disambiguation to learning multimodal (text + image) sense embeddings!

SapienzaNLP @ COLING 2020

The Sapienza NLP group is proud to present 2 papers at COLING 2020: "Bridging the Gap in Multilingual Semantic Role Labeling: a Language-Agnostic Approach" and "Conception: Multilingually-Enhanced, Human-Readable Concept Vector Representations".

SapienzaNLP @ EMNLP 2020

We are proud to announce that SapienzaNLP has 4 + 1 papers accepted at EMNLP 2020: 4 long papers at the main conference and 1 demo paper. Our contributions touch several areas from Word Sense Disambiguation to Semantic Parsing and Semantic Role Labeling.

SapienzaNLP @ ACL 2020

We are proud to announce that SapienzaNLP has 4 papers accepted at ACL: 2 long, 1 short, and a demo, making up almost half of the accepted papers from Italian institutions (4 out of 9). Our works lie in the areas of lexical semantics, multimodal representation learning and multilinguality.

VerbAtlas is now out!

We are proud to announce that VerbAtlas 1.0 (verbatlas.org) is finally available for download.

Developed at the Sapienza NLP group, the multilingual Natural Language Processing group at the Sapienza University of Rome, VerbAtlas is a novel large-scale manually-crafted semantic resource for wide-coverage, intelligible and scalable Semantic Role Labeling. The goal of VerbAtlas is to manually cluster WordNet synsets that share similar semantics into a set of semantically-coherent frames.

SyntagNet is now out!

We are proud to announce that SyntagNet 1.0 (http://syntagnet.org) is finally available for download. Developed at the Sapienza NLP group, the multilingual Natural Language Processing group at the Sapienza University of Rome, SyntagNet is a manually-curated large-scale lexical-semantic combination database which associates pairs of concepts with pairs of co-occurring words. The goal of SyntagNet is to capture sense distinctions evoked by syntagmatic relations, hence providing information which complements the essentially paradigmatic knowledge shared by currently available Lexical Knowledge Bases.

Welcome to the all-new Sapienza NLP website!

We are proud to launch our all-new website! Redesigned from the ground up with modern standards in mind, the Sapienza NLP website will be the main showcase for our research.

Under the guidance of Prof. Roberto Navigli, the Sapienza NLP group has grown continuously over the last few years and now features 4 postdoctoral research fellows and 12 PhD students. Click "Read More" to learn more about them and their research activity!